[2026 Tutorial] OpenAI Global Rate Limit Exceeded [6 Easy Fixes]

Reaching OpenAI's global rate limits can be frustrating when you're excited to keep using its powerful AI models. Suddenly finding your requests blocked mid-project is a real productivity killer. But don't worry: with a few simple fixes, you can adjust your code and habits to stay within your rate limits.

In this article, we'll explain what OpenAI's rate limiting is, why it happens, and easy solutions you can implement in about 5 minutes to avoid disruptions. With some minor changes, you can ensure your projects leveraging OpenAI continue to run smoothly.

We'll cover optimizing your code, caching responses, setting up timers, and more. Read on to learn how to keep your OpenAI usage just under the thresholds so you can continue enjoying access to models like GPT-3. Let’s see how to fix the OpenAI "global rate limit exceeded" error.

Part 1: What Does Global Rate Limit Exceeded Mean in OpenAI?

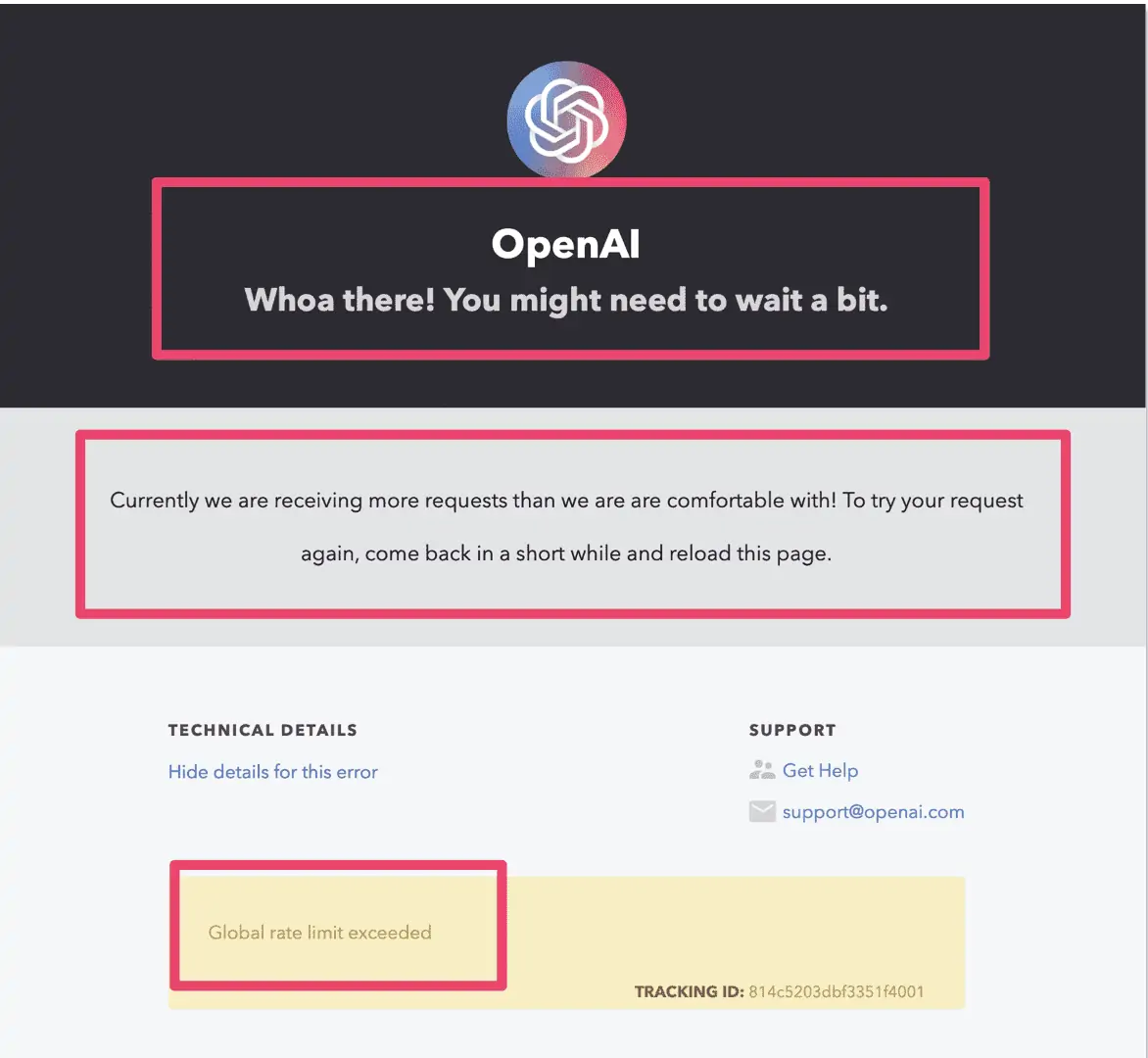

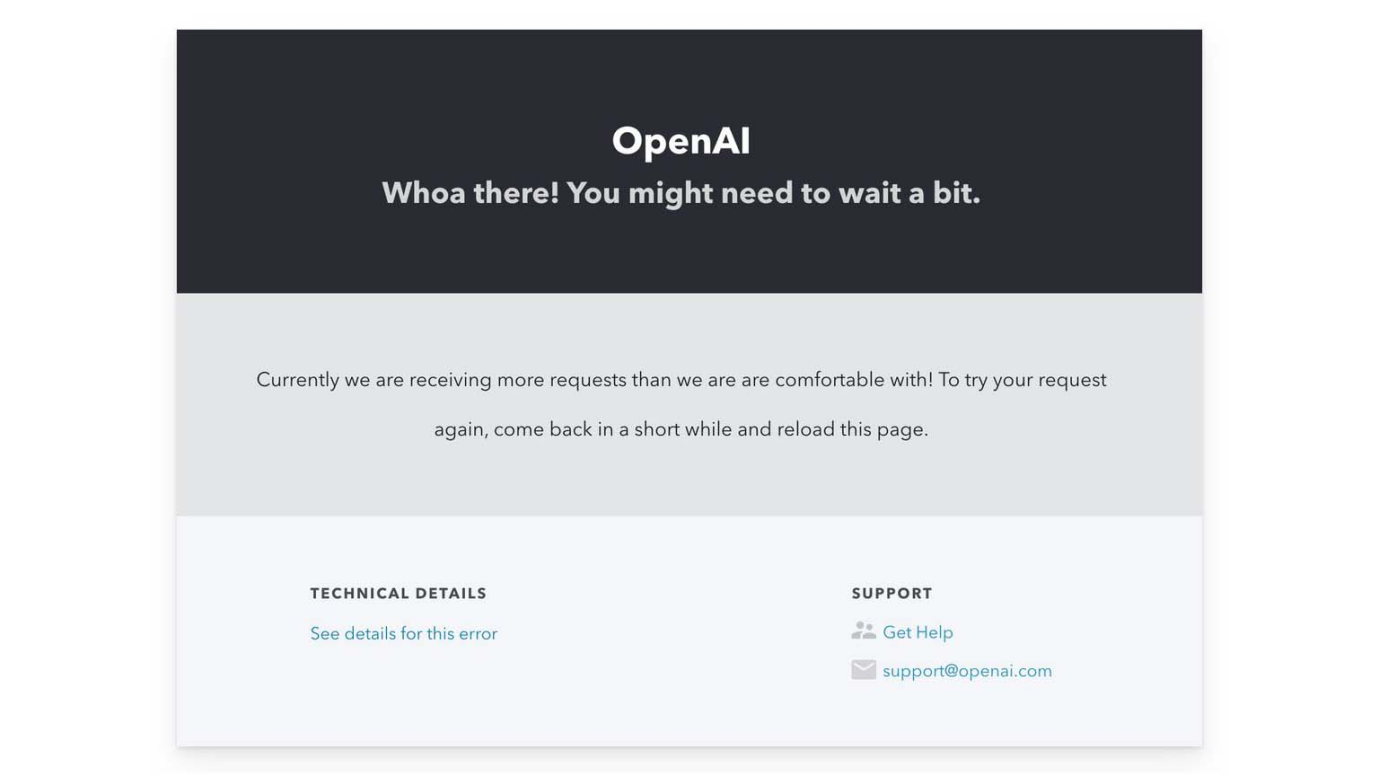

The "global rate limit exceeded" error means you have run into the usage limits OpenAI places on ChatGPT and its other AI models to manage capacity. When request traffic exceeds preset thresholds, OpenAI's servers reach their maximum load, and further requests are denied with this error.

Essentially, so many people are accessing ChatGPT simultaneously that OpenAI cannot keep up with the demand. The servers hit their total capacity to process natural language requests and have to start refusing connections.

This traffic overload is usually temporary, occurring during spikes of viral attention. But while it lasts, ChatGPT and other models are inaccessible to users who receive the rate limit error.

Sometimes, ChatGPT may instead show a message like "You are being rate limited."

Part 2: Why Are You Getting “Global Rate Limit Exceeded” On OpenAI?

The primary trigger for the "global rate limit exceeded" message on ChatGPT is simply too many people accessing OpenAI models at the same time. ChatGPT, in particular, recently went viral, with millions of new users flooding the servers in just days. This sudden, immense spike in traffic far surpassed OpenAI's capacity thresholds.

Essentially, the servers hit maximum load and had to start denying requests to avoid crashing completely. OpenAI accounts have usage quotas, with free tiers having lower allowances than paid plans. But even paid customers can run into rate limits during traffic surges.

Other contributing factors include programs and loops that call the API too frequently without any throttling or timers built in, as well as systems that don't cache model responses and therefore send redundant requests.
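A minimal sketch of the throttling idea in Python: a small helper that enforces a minimum gap between consecutive calls, so a loop cannot fire requests back-to-back. `Throttle` and `send_request` are hypothetical names used only for illustration, `send_request` stands in for your real API call, and the 1-second interval is an arbitrary assumption rather than a published OpenAI limit.

```python
import time


class Throttle:
    """Enforce a minimum interval (in seconds) between consecutive calls."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last_call = None  # monotonic timestamp of the previous call

    def wait(self) -> float:
        """Sleep just long enough to respect min_interval; return seconds slept."""
        now = time.monotonic()
        slept = 0.0
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last_call = time.monotonic()
        return slept


def send_request(prompt: str) -> str:
    # Hypothetical placeholder: replace with your real OpenAI API call.
    return f"response to {prompt!r}"


if __name__ == "__main__":
    throttle = Throttle(min_interval=1.0)  # assumption: at most ~1 request per second
    for prompt in ["first", "second", "third"]:
        throttle.wait()  # pause before each call instead of firing in a tight loop
        print(send_request(prompt))
```

Using `time.monotonic()` rather than wall-clock time keeps the spacing correct even if the system clock is adjusted while the loop runs.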

Part 3: [6 Best Fixes] How to Fix Global Rate Limit Exceeded on OpenAI

When you encounter the frustrating "global rate limit exceeded" errors, there are several straightforward troubleshooting steps you can take to get back up and running.

By making minor tweaks and exercising some patience, you can often get past temporary capacity issues on OpenAI's side. Try these 6 methods to fix global rate limit errors and restore access:

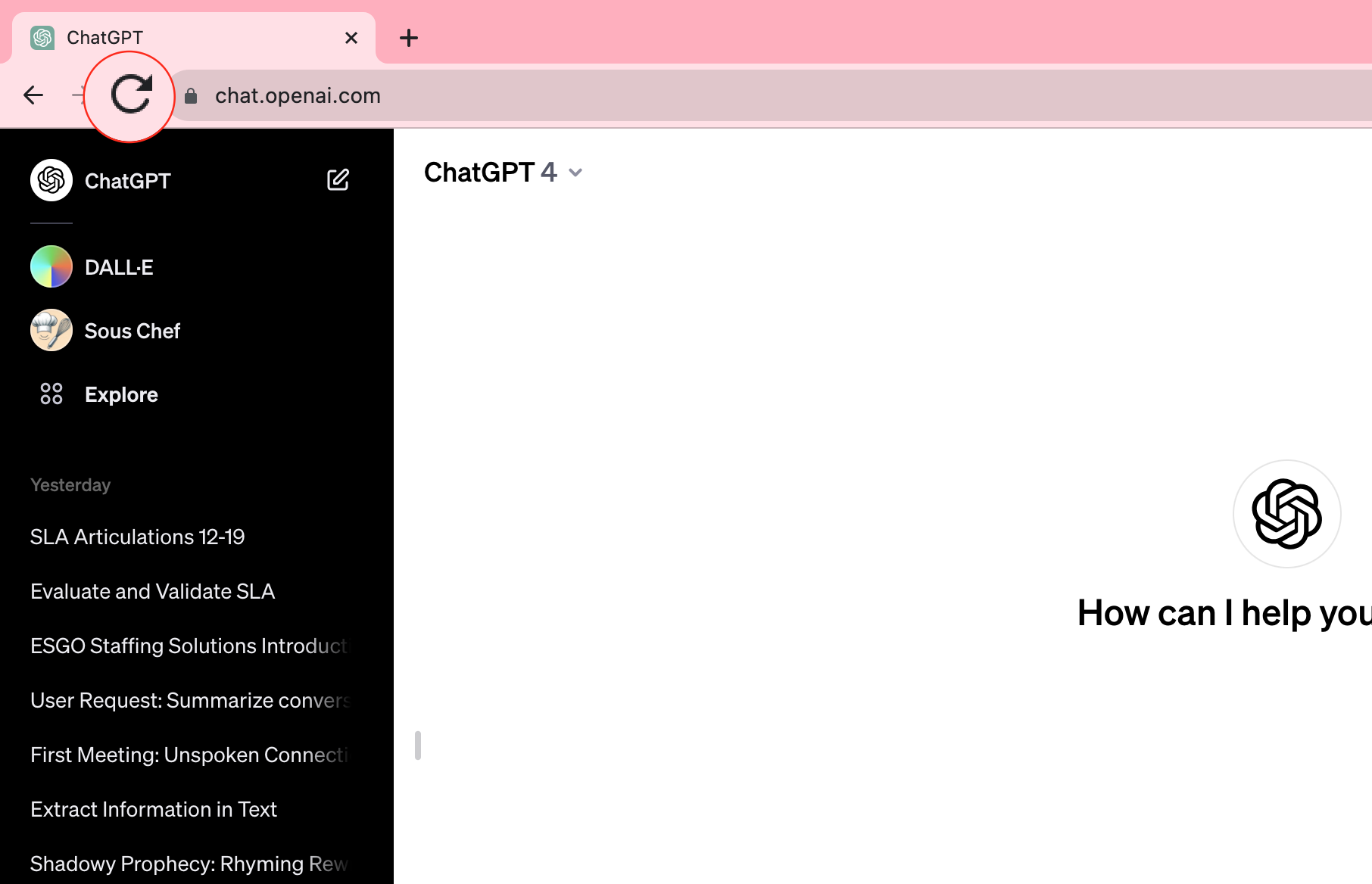

Fix 1: Reload Your Page

Refreshing your browser tab (click the reload button or press Ctrl+R, or Command+R on a Mac) sends a fresh request, which can clear transient server errors like rate limits. Browser caches can also hold on to outdated capacity messages, so refreshing pulls the latest status. It takes just a click and forces an entirely new page load.

If the limit was temporary, the new request may simply go through. During traffic spikes, holding off on requests for even 60 seconds often gives OpenAI's infrastructure time to catch up.

Fix 2: Re-Login

Signing fully out of your account and then logging back in generates a new authentication token. Sometimes the existing token goes stale after running into too many capacity errors, and a brand-new one re-syncs your credentials with OpenAI's servers.

The servers may treat this as a fully new session, discarding any rate limit history associated with the old token. As long as the limit isn't applied at the account level, this quick logout/login cycle gives you a clean slate.

Fix 3: Wait It Out

During major viral events driving lots of traffic to OpenAI, usage can spike far faster than capacity expands. Even large, healthy buffers get drained rapidly by floods of new users. If you encounter rate limits despite code tweaks on your end, the cause is likely a temporary surge pushing traffic past OpenAI's thresholds.

In these cases, taking a break and waiting an hour or two allows the tidal wave to settle. Think of it as letting the buffers refill after everyone rushed the gates. Have some tea, take a walk, or sleep on it. When you return, there's a good chance capacity has caught up.
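If you call the API from your own code, the programmatic version of "waiting it out" is to retry with exponential backoff: wait briefly after a rate-limit error, then progressively longer on each further failure. Below is a minimal Python sketch. `RateLimitError`, `with_backoff`, and `flaky_request` are hypothetical names for illustration (the official OpenAI Python library raises its own rate-limit exception, which you would catch instead), and the retry count and delays are assumptions to tune for your workload.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the rate-limit error your client raises (HTTP 429)."""


def with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
) -> T:
    """Call fn(), retrying on RateLimitError with exponentially growing, jittered delays."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up after the final retry
            # Delay doubles each attempt (1s, 2s, 4s, ...), capped at max_delay.
            delay = min(max_delay, base_delay * (2 ** attempt))
            # Random jitter keeps many clients from retrying in lockstep.
            time.sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky_request() -> str:
        # Hypothetical request that is rate limited twice, then succeeds.
        calls["count"] += 1
        if calls["count"] < 3:
            raise RateLimitError("global rate limit exceeded")
        return "ok"

    print(with_backoff(flaky_request, base_delay=0.1))  # prints "ok" after two retries
```

The jitter matters when many clients hit the same limit at once: without it, they would all retry at the same instant and trigger the error again.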

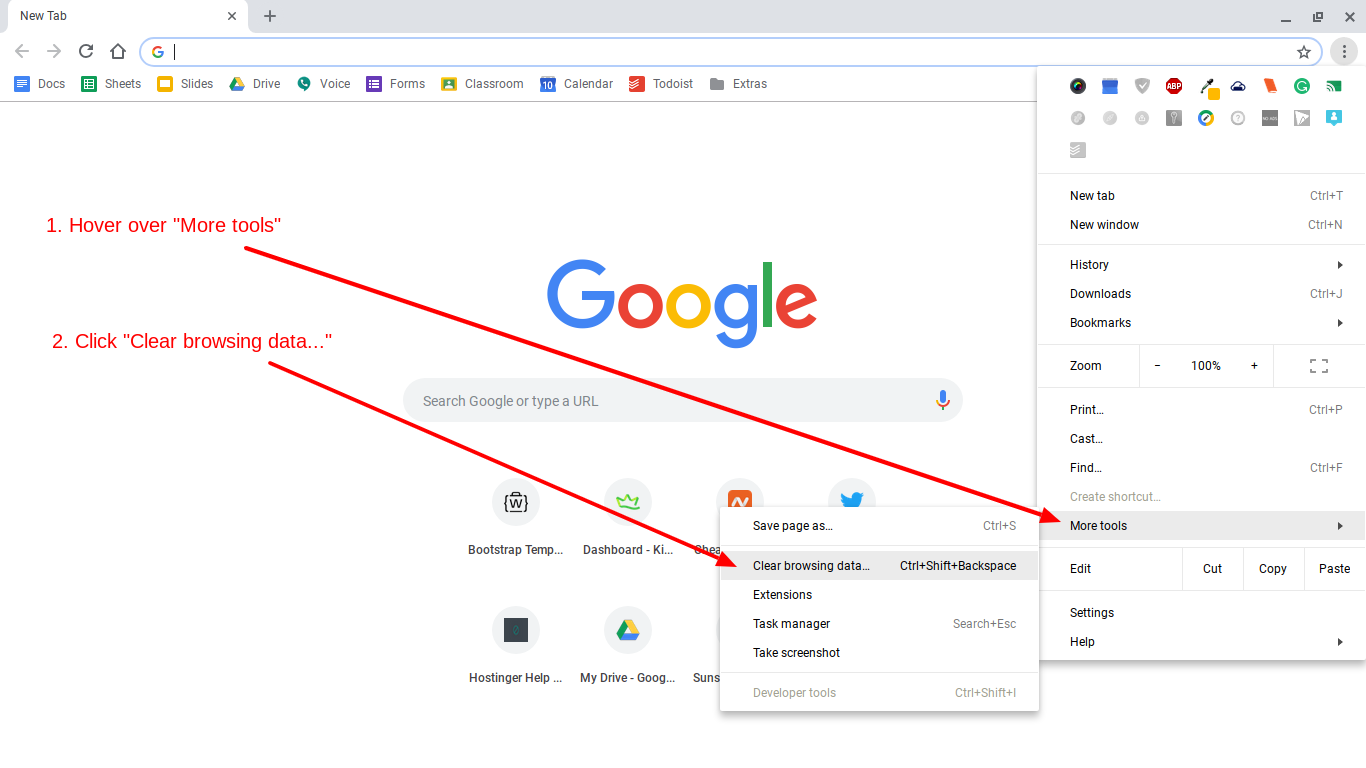

Fix 4: Clear Browser Data

Wiping your browser history, cookies, cache, and other saved data gives you a completely clean slate, which can resolve conflicts that trigger rate limit errors. Sometimes cluttered storage interacts oddly with OpenAI's web app, or old data interferes with new tokens.

Clearing everything resets any bad state, and it only takes a minute or two. Afterwards, just navigate back to OpenAI with no leftovers that could cause trouble.

Fix 5: Check Server Status

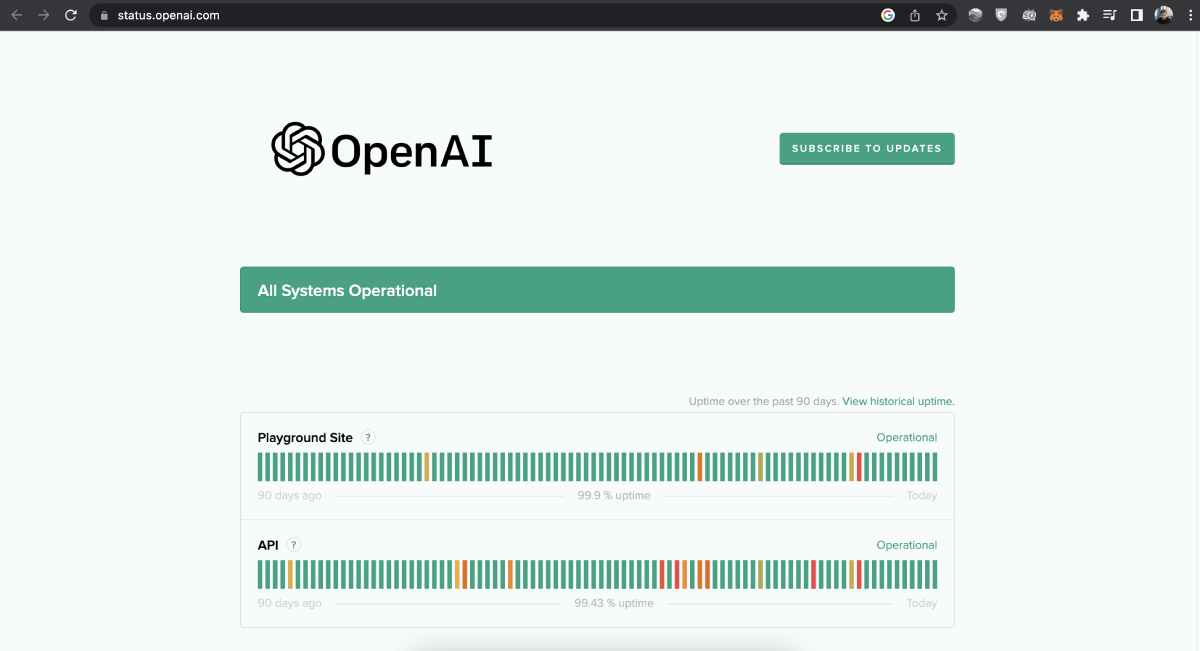

It never hurts to check https://status.openai.com/ for service degradation or scheduled maintenance windows. Even healthy services need downtime for upgrades, migrations, and bug fixes. If maintenance is listed for the APIs or products you use, that explains why your requests are being blocked.

When changes are actively in progress, there's no workaround but to wait for them to finish. Knowing the official status timelines helps set expectations. Bookmark the page so you can check whether OpenAI reports a disruption on its end whenever odd errors arise.

Fix 6: Contact Support

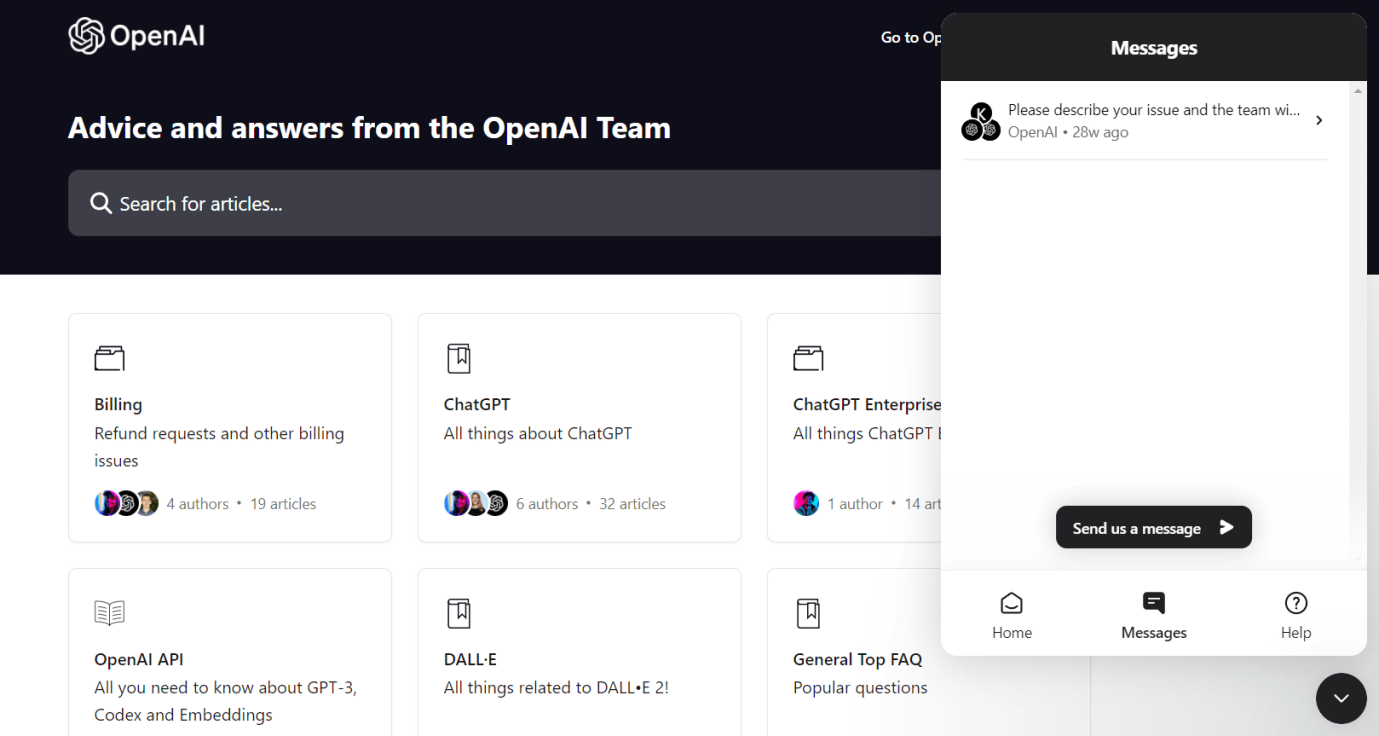

If nothing else works, explaining your rate limit issues directly to OpenAI customer support can reveal additional solutions. Support specialists have inside access for troubleshooting unusual account-specific cases. Include when the problems started, the steps you've already tried, any relevant error messages, and similar details.

Part 4: Bonus Tip: How to Chat with Your PDF Files Using AI?

As a bonus productivity hack, you can actually "chat" with PDF documents using smart AI services. Tools like Tenorshare AI Chat PDF use powerful machine learning models in the backend to analyze uploaded PDF files. Instead of manually combing through page after page yourself, you can simply ask the AI questions.

In effect, the AI reads the PDF for you on demand, based on your own questions, and delivers quick answers without you having to dig.

Features

- Accurate Summarization - AI models can condense key points from lengthy PDFs into concise overviews.

- Custom Querying - Ask specific questions about PDF contents and get precise answers extracted.

- Optical Character Recognition - Text in scanned images is converted to a machine-readable format.

- Natural Language Processing - Language algorithms allow querying PDFs in everyday human terms.

Steps

Step 1: Begin by uploading your desired PDF document to the AI chat platform.

Step 2: Next, register for a free account if you haven't already. Choose a username and password to create your account; this allows the platform to store your uploaded documents.

Step 3: Once logged in to your new account, you can start querying the PDF right away. Simply type a question into the chat interface as if talking to a person. For example, ask "What is this document mainly discussing?" or "What were the key conclusions?"

FAQs about OpenAI Global Rate Limit Exceeded Error

Q1: How do I create an account or log in to OpenAI ChatGPT?

Here is how you can easily create an account on OpenAI to use ChatGPT:

- Go to openai.com and find the account creation options

- Enter your email address and phone number as prompted

- Complete the identity confirmation steps

- Receive the account-created confirmation

- Log in to OpenAI products with your new credentials

Q2: Why is GPT not working? Is ChatGPT at capacity now?

Often, yes. ChatGPT reached maximum processing capacity due to a viral influx of interest: tens of millions of new users flooded in within just days, overwhelming OpenAI's infrastructure. That overload is what produces the "global rate limit exceeded" error.

Q3: How do you avoid rate limit in OpenAI API?

The best ways to avoid hitting OpenAI API rate limits are to build throttles and delays into your client code, cache responses instead of sending redundant requests, move logic client-side where possible, and cut down on rapid follow-up queries sent in sequence.

Final Words

Hitting OpenAI rate limits can halt projects relying on models like ChatGPT, but with simple fixes, you can get back up and running quickly. Now you know what "rate limit exceeded" means on ChatGPT.

Follow the 6 solutions covered above to reload the page, log back in, wait out spikes, clear conflicting data, confirm system status, and contact support if needed.

Exercising these best practices will help avoid disruptions, while still allowing you to enjoy the benefits of OpenAI as capacity expands.

Additionally, check out Tenorshare AI - PDF Tool for easily querying summaries and insights from PDFs using natural language, so you can skip exhaustive manual document analysis.
